[0.13] Flink upsert delete file metadata backports#4786

[0.13] Flink upsert delete file metadata backports#4786rdblue merged 4 commits intoapache:0.13.xfrom

Conversation

|

@kbendick could you please double-check if there are any other Flink changes that need to be backported? |

|

|

||

| @Override | ||

| protected StructLike asStructLikeKey(RowData data) { | ||

| return wrapper.wrap(data); |

There was a problem hiding this comment.

@kbendick should this throw an exception like in

or does it even matter what we return here for Flink 1.12?There was a problem hiding this comment.

I would keep it this way upon initial inspection.

It needs to be implemented for the API and it will possibly get called. Keeping it the same as asStructLike seems safest for backwards compatibility.

34edff3 to

275dce6

Compare

kbendick

left a comment

kbendick

left a comment

There was a problem hiding this comment.

+1. I wanted to take some time to verify some of the changes in core didn’t cause issues because we didn’t backport.

TLDR is that it shouldn’t (and we’re emphatically alerting people to upgrade anyway).

|

The test issue looks like a github problem. I tried to cancel, but it looks like I can't right now. I'll check back in a few hours. It should time out. |

|

Yes the job is timing out but it's unclear why because the run itself doesn't show any output. Locally it helped to increase the Heap size for Gradle (since the build didn't progress as well locally) |

|

So GH Actions use the settings in master for security purposes. Mayhe merging in the heap increase first will help? I doubt the tests are running with the heap increase if it’s in this PR. Just a guess but the tests didn’t complete without increased heap for me as well. Also, since this is a release branch, feel free to make the heap slightly bigger and/or tune the forked JVM’s GC settings in the settings.gradle (or wherever). But I think running with the heap settings already merged in will help the most. I think you can try it in your fork if you run the tests there. |

|

Oh and the other thing would be to add class unloading into the test JVM settings. I helped tune the JVM for CI in Spark and class unloading, a fixed MaxMetaSpaceSize (to force class unloading), and somewhat larger heaps helped quite a bit for a situation that had gotten really out of hand. Also my only meaningful contribution to the Spark repo and the credit got misattributed as I never added that email to my GitHub 😂 But class unloading really helps due to Flink’s inverted class loader. And out JVM prior to this change (and even still really) is very small in my opinion. Again, since this is a release branch and not one we’ll work off of ever again very likely, feel free to be liberal in updating the JVM for test purposes! They just need to run enough to make the release! |

|

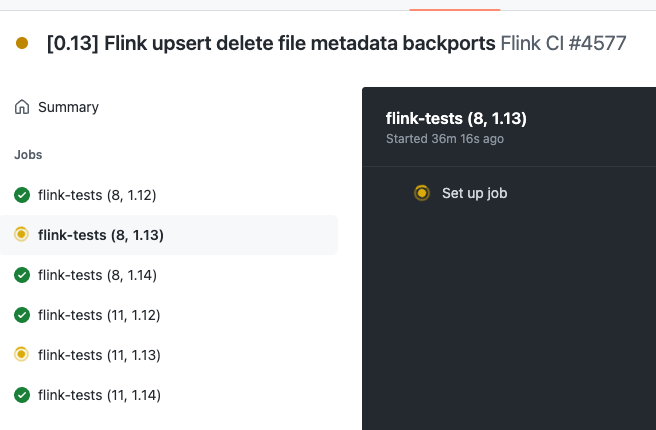

The only other issue I can think of is a GitHub issue with the JVM cache as the problem is sticky for Flink 1.13. But the most recent attempt did run somewhat so I don’t think that’s it. I think it’s just poor memory utilization / availability combined with some somewhat heavy Flink SQL upsert tests. |

Co-authored-by: liliwei <hililiwei@gmail.com>

Co-authored-by: liliwei <hililiwei@gmail.com>

… one checkpoint cycle (apache#4189)

275dce6 to

5ea6b7f

Compare

|

I think I found the issue (which can easily be debugged locally by adding |

+1 to better timeout resolution. It's possible there's something else we need to cherry-pick given the the underlying exception for that one test case. I'll run locally and we'll see. We can also try to schedule time to talk if you'd like. I'm very interested in getting this out the door. Though tests do seem to be passing now so potentially you already got to it. 🙂 |

This backports #4417 / #3834 (because #4364 depends on it) / #4364 / #4189 (because CI was failing without this test fix) to the 0.13.x branch